Symptoms

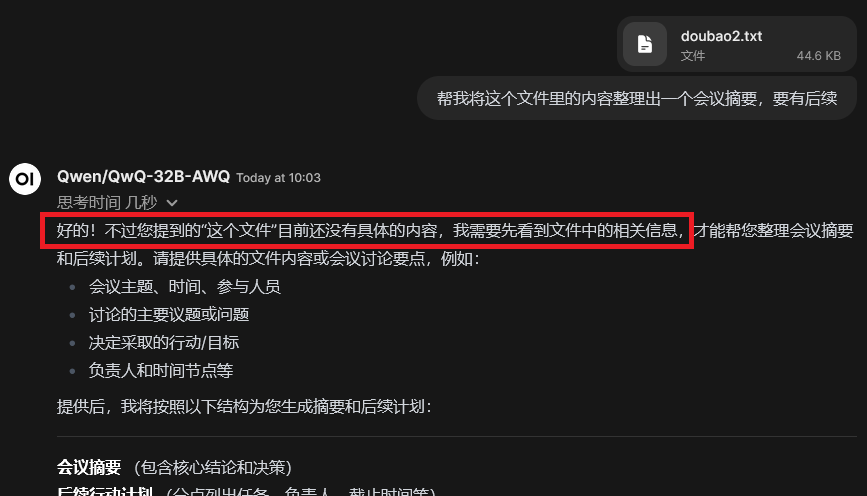

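After uploading an attachment in Open WebUI and then chatting or asking questions about it, the request fails with an error.

The backend log shows the following errors: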

WARNI [python_multipart.multipart] Skipping data after last boundary

INFO [open_webui.routers.files] file.content_type: text/plain

INFO [open_webui.routers.retrieval] save_docs_to_vector_db: document doubao2.txt file-16bc9379-b8ff-4884-ba51-166efbd67e1a

Collection file-16bc9379-b8ff-4884-ba51-166efbd67e1a does not exist.

INFO [open_webui.routers.retrieval] adding to collection file-16bc9379-b8ff-4884-ba51-166efbd67e1a

ERROR [open_webui.routers.retrieval] 'NoneType' object has no attribute 'encode'

Traceback (most recent call last):

File "/root/miniconda3/lib/python3.12/site-packages/open_webui/routers/retrieval.py", line 784, in save_docs_to_vector_db

embeddings = embedding_function(

^^^^^^^^^^^^^^^^^^^

File "/root/miniconda3/lib/python3.12/site-packages/open_webui/retrieval/utils.py", line 265, in <lambda>

return lambda query, user=None: embedding_function.encode(query).tolist()

^^^^^^^^^^^^^^^^^^^^^^^^^

AttributeError: 'NoneType' object has no attribute 'encode'

ERROR [open_webui.routers.retrieval] 'NoneType' object has no attribute 'encode'

Problem analysis

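Judging from the backend error, the embedding_function called inside save_docs_to_vector_db is what fails: it is None, so calling encode on it raises the AttributeError.

A closer look at the code suggests the cause is that the sentence-transformers embedding model engine has not been installed.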
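The failure mode in the traceback can be reproduced in isolation. The sketch below is a simplified stand-in for the lambda shown at utils.py line 265, not Open WebUI's actual code; build_embedding_function is a hypothetical name used only for illustration. It assumes the embedding model failed to load, so the wrapper receives None.

```python
def build_embedding_function(model):
    """Wrap a model in a callable, mirroring the lambda seen in the traceback."""
    return lambda query, user=None: model.encode(query).tolist()


# Assumption: the embedding model was never loaded, so None is passed in.
embed = build_embedding_function(None)

try:
    embed("hello")
    error_message = None
except AttributeError as exc:
    # Building the wrapper succeeds; the error only appears on the first call.
    error_message = str(exc)

print(error_message)
```

The wrapper is created without complaint, which is why the problem only surfaces when a document is actually embedded.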
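One quick way to test this hypothesis is to check whether the package can be imported in the Python environment that runs Open WebUI. This is a minimal sketch using only the standard library; is_package_installed is a hypothetical helper name, and it only checks that the package is present, not that a model has been downloaded.

```python
import importlib.util


def is_package_installed(name: str) -> bool:
    """Return True if a top-level package can be found in this environment."""
    return importlib.util.find_spec(name) is not None


print("sentence_transformers installed:",
      is_package_installed("sentence_transformers"))
```

If this prints True, the package itself is present and the missing piece is more likely the model download, which is what the next section runs into.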

Solution

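Switch back to the Open WebUI front end and log in as an administrator. Click "Settings" in the upper-right corner, open "Documents", and then click the download icon on the right side of that page, as shown in the figure below.

If the download succeeds, the problem should be resolved. In my case, however, the download failed, so there was more work to do. Read on.

Downloading sentence-transformers/all-MiniLM-L6-v2 from the Documents settings fails

The backend log shows the following error: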

ERROR [open_webui.retrieval.utils] Cannot determine model snapshot path: Cannot find an appropriate cached snapshot folder for the specified revision on the local disk and outgoing traffic has been disabled. To enable repo look-ups and downloads online, pass 'local_files_only=False' as input.

Traceback (most recent call last):

File "/root/miniconda3/lib/python3.12/site-packages/open_webui/retrieval/utils.py", line 425, in get_model_path

model_repo_path = snapshot_download(**snapshot_kwargs)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/root/miniconda3/lib/python3.12/site-packages/huggingface_hub/utils/_validators.py", line 114, in _inner_fn

return fn(*args, **kwargs)

^^^^^^^^^^^^^^^^^^^

File "/root/miniconda3/lib/python3.12/site-packages/huggingface_hub/_snapshot_download.py", line 219, in snapshot_download

raise LocalEntryNotFoundError(

huggingface_hub.errors.LocalEntryNotFoundError: Cannot find an appropriate cached snapshot folder for the specified revision on the local disk and outgoing traffic has been disabled. To enable repo look-ups and downloads online, pass 'local_files_only=False' as input.

WARNI [sentence_transformers.SentenceTransformer] No sentence-transformers model found with name sentence-transformers/all-MiniLM-L6-v2. Creating a new one with mean pooling.

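INFO: 127.0.0.1:56474 - "POST /api/v1/retrieval/embedding/update HTTP/1.1" 200 OK

Judging from this error, get_model_path() in open_webui/retrieval/utils.py fails because its call to snapshot_download() cannot reach Hugging Face. We therefore need to adjust the setup: either route traffic through a proxy or point Hugging Face downloads at a mirror site.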
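Before changing any settings, it can help to confirm the connectivity diagnosis from the same machine. The following is a rough sketch using only the standard library; endpoint_reachable is a hypothetical helper, and a True result only means an HTTP request succeeded, not that model downloads will work.

```python
import urllib.error
import urllib.request


def endpoint_reachable(url: str, timeout: float = 5.0) -> bool:
    """Return True if an HTTP request to url gets a successful response."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return 200 <= response.status < 400
    except (urllib.error.URLError, OSError, ValueError):
        # Covers DNS failures, refused connections, timeouts,
        # HTTP error statuses, and malformed URLs.
        return False
```

Calling endpoint_reachable("https://huggingface.co") and endpoint_reachable("https://hf-mirror.com") from the server shows which of the two is usable. urllib honors the proxy environment variables, so the check can be repeated after the proxy is configured.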

Stop Open WebUI on the backend, then configure the proxy and the Hugging Face mirror.

Set up the proxy

import os
import subprocess

# Source the proxy script and print the proxy variables it exports.
result = subprocess.run('bash -c "source /etc/network_turbo && env | grep proxy"', shell=True, capture_output=True, text=True)
output = result.stdout

# Copy each NAME=value pair into this process's environment.
for line in output.splitlines():
    if '=' in line:
        var, value = line.split('=', 1)
        os.environ[var] = value

Set the Hugging Face mirror

export HF_ENDPOINT=https://hf-mirror.com
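The same mirror setting can also be applied from Python, for example to pre-download the model before starting the server. This is a sketch under stated assumptions: configure_mirror and prefetch_model are hypothetical helper names, huggingface_hub is assumed to be installed, and HF_ENDPOINT is set before the library is imported because it appears to read the variable at import time. Whether Open WebUI then finds the downloaded files depends on its own cache directory settings, which this sketch does not address.

```python
import os

MODEL_ID = "sentence-transformers/all-MiniLM-L6-v2"


def configure_mirror(endpoint: str = "https://hf-mirror.com") -> str:
    """Point Hugging Face downloads at a mirror for this process."""
    os.environ["HF_ENDPOINT"] = endpoint
    return os.environ["HF_ENDPOINT"]


def prefetch_model(repo_id: str = MODEL_ID) -> str:
    """Download the model snapshot into the local cache and return its path."""
    # Imported lazily so HF_ENDPOINT is already set when the library loads.
    from huggingface_hub import snapshot_download

    return snapshot_download(repo_id=repo_id)


configure_mirror()
# prefetch_model()  # uncomment to download; requires network access
```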

Restart Open WebUI

After running ./start.sh again, chatting with the attachment works as expected.

Here is my start.sh:

#!/usr/bin/env bash

# open-webui does not natively honor the HOST and PORT environment variables, so they must be passed as arguments

# https://docs.openwebui.com/getting-started/env-configuration/#port

# If open-webui misbehaves, run `rm -rf ./data` to wipe its data, then restart the service and clear the browser cache

# https://docs.openwebui.com/getting-started/env-configuration/

export DATA_DIR="$(pwd)/data"

export ENABLE_OLLAMA_API=False

export ENABLE_OPENAI_API=True

export OPENAI_API_KEY="dont_change_this_cuz_openai_is_the_mcdonalds_of_ai"

export OPENAI_API_BASE_URL="http://127.0.0.1:8000/v1" # <--- must match the API configuration of ktransformers/llama.cpp

#export DEFAULT_MODELS="openai/foo/bar" # <--- leave commented out; this parameter is for `litellm` integration

export WEBUI_AUTH=True

export DEFAULT_USER_ROLE="admin"

export HOST=127.0.0.1

export PORT=3000

# If only the R1 model is loaded, disabling the following features saves time:

# * tag generation

# * autocomplete

# * title generation

# https://github.com/kvcache-ai/ktransformers/issues/618#issuecomment-2681381587

export ENABLE_TAGS_GENERATION=False

export ENABLE_AUTOCOMPLETE_GENERATION=False

# This feature may currently need to be disabled manually in the UI.

export TITLE_GENERATION_PROMPT_TEMPLATE=""

open-webui serve \
    --host "$HOST" \
    --port "$PORT"