At first: a warning that FlashInfer is not installed

A warning appears while vLLM is starting up.

[topk_topp_sampler.py:63] FlashInfer is not available. Falling back to the PyTorch-native implementation of top-p & top-k sampling. For the best performance, please install FlashInfer.

So, install FlashInfer

pip install flashinfer-python

After installing: a message that FlashInfer>=v0.2.3 is not backward compatible

There is still a warning; it has only changed form, and it does not say which version should be used instead.

[topk_topp_sampler.py:38] Currently, FlashInfer top-p & top-k sampling sampler is disabled because FlashInfer>=v0.2.3 is not backward compatible. Falling back to the PyTorch-native implementation of top-p & top-k sampling.

Check the code at line 38 of topk_topp_sampler.py

Source file location: vllm/vllm/v1/sample/ops/topk_topp_sampler.py

    class TopKTopPSampler(nn.Module):

        def __init__(self):
            super().__init__()
            if current_platform.is_cuda():
                if is_flashinfer_available:
                    flashinfer_version = flashinfer.__version__
                    if flashinfer_version >= "0.2.3":
                        # FIXME(DefTruth): Currently, we have errors when using
                        # FlashInfer>=v0.2.3 for top-p & top-k sampling. As a
                        # workaround, we disable FlashInfer for top-p & top-k
                        # sampling by default while FlashInfer>=v0.2.3.
                        # The sampling API removes the success return value
                        # of all sampling API, which is not compatible with
                        # earlier design.
                        # https://github.com/flashinfer-ai/flashinfer/releases/
                        # tag/v0.2.3
                        logger.info(
                            "Currently, FlashInfer top-p & top-k sampling sampler "
                            "is disabled because FlashInfer>=v0.2.3 is not "
                            "backward compatible. Falling back to the PyTorch-"
                            "native implementation of top-p & top-k sampling.")
                        self.forward = self.forward_native
                    elif envs.VLLM_USE_FLASHINFER_SAMPLER is not False:
                        # NOTE(woosuk): The V0 sampler doesn't use FlashInfer for
                        # sampling unless VLLM_USE_FLASHINFER_SAMPLER=1 (i.e., by
                        # default it is unused). For backward compatibility, we set
                        # `VLLM_USE_FLASHINFER_SAMPLER` as None by default and
                        # interpret it differently in V0 and V1 samplers: In V0,
                        # None means False, while in V1, None means True. This is
                        # why we use the condition
                        # `envs.VLLM_USE_FLASHINFER_SAMPLER is not False` here.
                        logger.info("Using FlashInfer for top-p & top-k sampling.")
                        self.forward = self.forward_cuda
                    else:
                        logger.warning(
                            "FlashInfer is available, but it is not enabled. "
                            "Falling back to the PyTorch-native implementation of "
                            "top-p & top-k sampling. For the best performance, "
                            "please set VLLM_USE_FLASHINFER_SAMPLER=1.")
                        self.forward = self.forward_native
                else:
                    logger.warning(
                        "FlashInfer is not available. Falling back to the PyTorch-"
                        "native implementation of top-p & top-k sampling. For the "
                        "best performance, please install FlashInfer.")
                    self.forward = self.forward_native

Judging from this code, the FlashInfer sampler is only disabled when the version is 0.2.3 or newer, so any version below 0.2.3 should work.
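The branch structure above can be restated as a small standalone sketch. This is not vLLM's code: `parse_version` and `pick_sampler` are hypothetical helpers, a CUDA platform is assumed, and the numeric tuple comparison is deliberately swapped in for the string comparison in the quoted source. The version string "0.2.10" is used only to show how the two comparisons differ in Python.

```python
import re
from typing import Optional


def parse_version(version: str) -> tuple:
    """Turn '0.2.10' or '0.2.3.post1' into a tuple of its leading integers."""
    parts = []
    for piece in version.split("."):
        match = re.match(r"\d+", piece)
        if match is None:
            break  # stop at the first non-numeric component, e.g. 'post1'
        parts.append(int(match.group()))
    return tuple(parts)


def pick_sampler(flashinfer_version: Optional[str],
                 use_flashinfer_env: Optional[bool] = None) -> str:
    """Hypothetical mirror of the quoted branches (CUDA platform assumed)."""
    if flashinfer_version is None:
        return "native"      # FlashInfer is not installed
    if parse_version(flashinfer_version) >= (0, 2, 3):
        return "native"      # disabled for FlashInfer >= 0.2.3
    if use_flashinfer_env is not False:
        return "flashinfer"  # None counts as enabled in the V1 sampler
    return "native"          # explicitly disabled via the environment variable


# Python compares strings character by character, so these two disagree.
print("0.2.10" >= "0.2.3")                   # False
print(parse_version("0.2.10") >= (0, 2, 3))  # True
print(pick_sampler("0.2.2"))                 # flashinfer
```

With 0.2.2 both comparisons agree, which is why pinning that version reaches the FlashInfer branch.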

Uninstall flashinfer-python

pip uninstall flashinfer-python

Install the older 0.2.2 release

pip install flashinfer-python==0.2.2

Restart the server

FlashInfer is finally enabled!

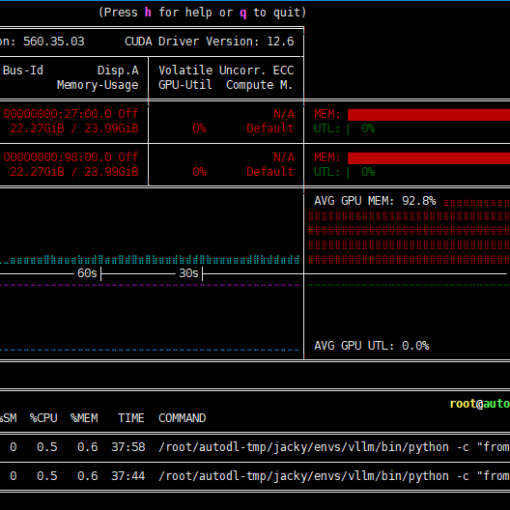

(VllmWorker rank=0 pid=13950) INFO 03-26 15:01:52 [topk_topp_sampler.py:53] Using FlashInfer for top-p & top-k sampling.

But a warning still appears: TORCH_CUDA_ARCH_LIST is not set

(VllmWorker rank=0 pid=13950) /root/autodl-tmp/jacky/envs/vllm/lib/python3.12/site-packages/torch/utils/cpp_extension.py:2059: UserWarning: TORCH_CUDA_ARCH_LIST is not set, all archs for visible cards are included for compilation.

(VllmWorker rank=0 pid=13950) If this is not desired, please set os.environ['TORCH_CUDA_ARCH_LIST'].

Set TORCH_CUDA_ARCH_LIST to 8.9

I am using an RTX 4090, whose CUDA compute capability is 8.9, so TORCH_CUDA_ARCH_LIST should be 8.9.

export TORCH_CUDA_ARCH_LIST="8.9"

Restart the server

Success!
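The value 8.9 is specific to the RTX 4090. For other cards, a minimal sketch like the following could look up the right value instead of hard-coding it. It assumes PyTorch is installed and uses GPU index 0; `arch_list_entry` and `detect_arch_list` are hypothetical helper names.

```python
def arch_list_entry(capability: tuple) -> str:
    """Format a (major, minor) compute capability as e.g. '8.9'."""
    major, minor = capability
    return f"{major}.{minor}"


def detect_arch_list():
    """Return the entry for GPU 0, or None if PyTorch or CUDA is unavailable."""
    try:
        import torch
    except ImportError:
        return None
    if not torch.cuda.is_available():
        return None
    # get_device_capability returns a (major, minor) tuple of ints.
    return arch_list_entry(torch.cuda.get_device_capability(0))


print(arch_list_entry((8, 9)))  # 8.9
print(detect_arch_list())
```

The printed value can then be used in the `export TORCH_CUDA_ARCH_LIST=...` line before the server is started.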
